Two people were examining the output of the new computer in their department. After an hour or so of analyzing the data, one of them remarked: "Do you realize it would take 400 men at least 250 years to make a mistake this big?" - Unknown

I'm a big fan of Riccardo Rebonato. From his book on interest rate models, a required text in my grad school, to his papers on interest rate measures in the "real world", he's an extremely clear thinker on otherwise murky stuff. I can't recommend his recent book, Plight of the Fortune Tellers, highly enough. If you or your clients are in the business of making tough financial decisions, it's a must-read, and enjoyable to boot. Enough gushing (I need payment to go any further ...)

One extremely important concept woven throughout Plight is the difference between the traditional "probability as frequency" concept and the more general Bayesian or "subjective" probability. Probability as a pure frequentist concept is a special case of Bayesian/subjective probability, one that is appropriate when looking at the likelihood of a head after a coin flip. Beyond a belief that the coin is fair, no prior knowledge is necessary to reliably assess the likelihood of such an event. Contrast that with, say, the probability that the Jets win the Super Bowl in 2011, or that the Republicans retake the House in November, or even that gold goes over $1,500 an ounce by year end. These are all events to which we could also assign a probability, though analyzing purely historical data in a frequentist sort of way will yield few helpful results. We are much more inclined to include and use other relevant information, such as the Jets' strong defense going into the next season, the anti-incumbent mood of the electorate, and the growth of global money supplies.
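As a rough illustration of how the two views relate, here is a minimal sketch using a Beta prior updated with yes/no observations. The prior (a 20% belief carrying the weight of ten observations), the 40% observed frequency, and the function names are all hypothetical choices for illustration; nothing here comes from Rebonato's book.

```python
def frequentist_estimate(successes, trials):
    """Pure frequency: the share of trials in which the event occurred."""
    return successes / trials


def beta_posterior_mean(prior_a, prior_b, successes, trials):
    """Mean of the Beta posterior after updating a Beta(prior_a, prior_b)
    prior with binomial data (successes out of trials)."""
    return (prior_a + successes) / (prior_a + prior_b + trials)


# Hypothetical prior judgment: roughly a 20% chance, held with the
# weight of 10 pseudo-observations (2 "successes", 8 "failures").
PRIOR_A, PRIOR_B = 2.0, 8.0

for trials in (5, 50, 5000):
    successes = trials * 2 // 5  # assume the event occurs in 40% of the data
    freq = frequentist_estimate(successes, trials)
    post = beta_posterior_mean(PRIOR_A, PRIOR_B, successes, trials)
    print(f"n={trials:5d}  frequency={freq:.3f}  posterior mean={post:.3f}")
```

With only five observations the prior judgment dominates the answer; with thousands, the posterior mean is nearly indistinguishable from the raw frequency, which is the sense in which the frequentist number is a special case.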

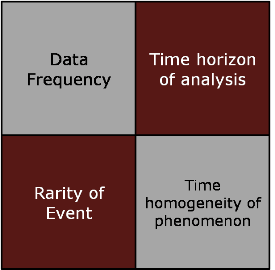

What does this have to do with the use of raw historical data in financial decision-support analytics? A lot. Certain financial questions are better answered using frequentist concepts. Others are far more judgment-based, relying on more subjective criteria and professional experience. But how do you know which situations are which? Though no hard and fast rules exist, there are basically four criteria:

Data frequency - The more relevant data you have, the more the analysis leans towards a frequentist approach.

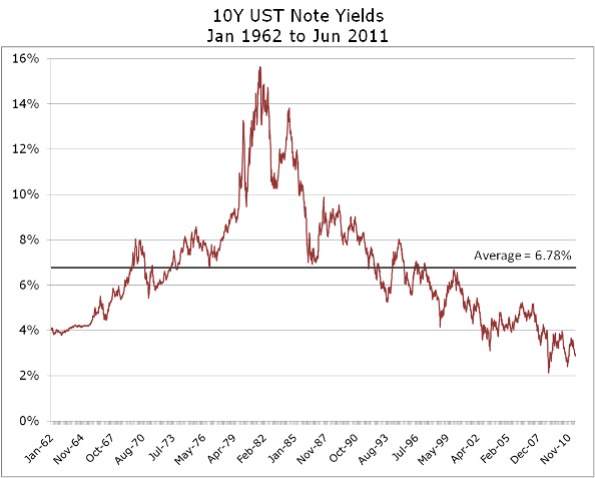

Time horizon - The longer the horizon of analysis, the more relevant a subjective analysis becomes.

Rarity of event - The rarer the event, the more the analysis calls for a Bayesian/subjective approach.

Time homogeneity of data - Was the data gathered over a period free of regime changes or other tectonic shifts in the underlying phenomena? If so, the analysis will tend more towards frequentist methods.

So for long time horizons, a scarcity of data, significant changes through time in the realm in which the data lives, and highly improbable events, we land squarely in the realm of subjective probabilities. Though historical/frequentist data isn't ever completely irrelevant, in these circumstances professional judgment of the situation at hand trumps pure number crunching. Unfortunately, from rating agencies to regulators to a large swath of finance professionals, this is not well understood. Things are just much cleaner and simpler if we allow ourselves to believe that 100 data points and a fancy model will yield precision at a 99.97% confidence level. This is a particularly dangerous type of belief in finance, as acutely borne out over the last 18 months.
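To put a rough number on the "100 data points" problem, the sketch below asks how likely it is that a sample of independent observations contains even one outcome from the tail beyond a given confidence level. It assumes independent, identically distributed observations, which is itself generous given the time-homogeneity caveat above, and the function names are hypothetical.

```python
import math


def prob_tail_observed(n_obs, confidence):
    """Chance that at least one of n_obs independent draws falls in the
    tail beyond the given confidence level (e.g. 0.9997)."""
    tail = 1.0 - confidence
    return 1.0 - (1.0 - tail) ** n_obs


def obs_needed(confidence, target_prob=0.5):
    """Smallest number of independent draws for which the chance of seeing
    at least one tail event reaches target_prob."""
    tail = 1.0 - confidence
    return math.ceil(math.log(1.0 - target_prob) / math.log(1.0 - tail))


p = prob_tail_observed(100, 0.9997)
print(f"Chance that 100 points include a 99.97% tail event: {p:.1%}")
print(f"Points needed for an even chance: {obs_needed(0.9997)}")
```

Under these assumptions, 100 data points have only about a 3% chance of including a single observation from the 99.97% tail, so any estimate at that level comes almost entirely from the model and the judgment behind it rather than from the data.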

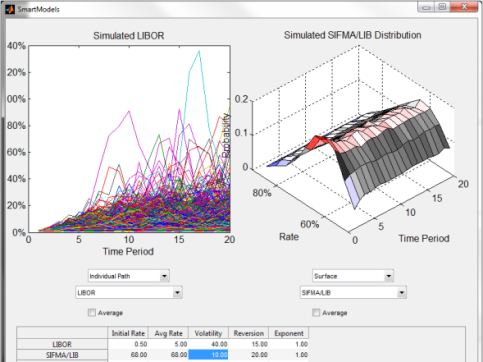

The good news is that, whether frequentist or subjective, widely available probability-based models should always be used to capture risk metrics, evaluate best and worst outcomes, assess breakevens, and ultimately avoid the ever-pervasive flaw of averages.
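For readers unfamiliar with the flaw of averages, here is a small sketch of the idea: a plan evaluated at the average input is generally not the average outcome. The capacity, margin, and two demand scenarios are made-up numbers chosen only to keep the arithmetic easy.

```python
def profit(demand, capacity=100, margin=1.0):
    """Profit when sales are capped by capacity (hypothetical toy model)."""
    return margin * min(demand, capacity)


# Two equally likely demand scenarios (assumed for illustration).
scenarios = [50, 150]

average_demand = sum(scenarios) / len(scenarios)
profit_at_average_demand = profit(average_demand)
average_profit = sum(profit(d) for d in scenarios) / len(scenarios)

print(f"Profit at average demand: {profit_at_average_demand}")
print(f"Average profit across scenarios: {average_profit}")
```

Planning on the average demand of 100 suggests a profit of 100, while the average profit across the two scenarios is 75, because the upside is capped by capacity and the downside is not.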